This reserved data is known as the validation data set, or validation set. For instance, a small, randomly selected portion of a given training set could be used as a validation set, with the rest used as the true training set.

An underfitted model is unable to identify the prevailing trend in the training dataset. In contrast to overfitted models, underfitted models have high bias and low variance in their predictions. When fitting a model, the goal is to find the "sweet spot" between underfitting and overfitting so that a dominant pattern can be established and applied generally to new datasets. On the other hand, if a machine learning model is overfitted, it performs noticeably worse on the test data than on the training data.
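As a minimal sketch of the hold-out idea described above, the snippet below reserves a random portion of a data set for validation. The function name `train_validation_split`, the 20% default, and the fixed seed are illustrative assumptions, not part of any particular library.

```python
import random


def train_validation_split(samples, validation_fraction=0.2, seed=0):
    """Shuffle a copy of `samples` and reserve a fraction as the validation set."""
    if not 0.0 < validation_fraction < 1.0:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(samples)                # copy, so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)   # seeded for reproducibility
    n_validation = max(1, int(len(shuffled) * validation_fraction))
    validation_set = shuffled[:n_validation]
    training_set = shuffled[n_validation:]
    return training_set, validation_set


# Hypothetical data set: 100 integer "samples".
data = list(range(100))
train, val = train_validation_split(data)
print(len(train), len(val))
```

The model is then fitted only on `train`, and `val` is used to compare candidate models before the test set is ever touched.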

Strategies To Reduce Overfitting


The Complete Guide On Overfitting And Underfitting In Machine Learning

However, the test data includes only candidates from a specific gender or ethnic group. In this case, overfitting causes the algorithm's prediction accuracy to drop for candidates whose gender or ethnicity falls outside the test dataset. The key to avoiding overfitting lies in striking the right balance between model complexity and generalization capability. It is crucial to tune models prudently and never lose sight of the model's final goal: to make accurate predictions on unseen data. Striking the right balance can result in a robust predictive model capable of delivering accurate predictive analytics. Because of its high sensitivity to the training data (including its noise and irregularities), an overfit model struggles to make accurate predictions on new datasets.

Linear Function Fitting (Underfitting)

Feature selection, or pruning, identifies the most important features within the training set and eliminates irrelevant ones. For instance, to predict whether an image shows an animal or a human, you can look at various input parameters such as face shape, ear position, and body structure.

Regularization is a collection of training and optimization techniques that seek to reduce overfitting. These methods try to eliminate the factors that do not affect the prediction outcomes by grading features based on importance. For example, mathematical calculations apply a penalty value to features with minimal impact. Consider a statistical model attempting to predict the housing prices of a city in 20 years.
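The penalty idea described above can be illustrated with a one-feature, L2-penalized (ridge-style) least-squares fit. This is a sketch under stated assumptions: the helper `fit_slope`, the toy data, and the penalty values are hypothetical and chosen only to show how a larger penalty shrinks the learned weight toward zero.

```python
def fit_slope(xs, ys, penalty=0.0):
    """Slope w for the model y ~ w * x (no intercept) with an L2 penalty.

    Minimizes sum((y - w*x)**2) + penalty * w**2, whose closed-form
    solution is w = sum(x*y) / (sum(x*x) + penalty).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + penalty)


# Hypothetical toy data, roughly following y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

# A larger penalty pulls the weight closer to zero.
slopes = [fit_slope(xs, ys, penalty) for penalty in (0.0, 1.0, 10.0, 100.0)]
for penalty, slope in zip((0.0, 1.0, 10.0, 100.0), slopes):
    print(penalty, round(slope, 3))
```

With many features, the same penalty term discourages the model from assigning large weights to features that contribute little, which is how regularization limits overfitting.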

Underfit models are not equipped to handle the complexity of the data they encounter, which reduces the reliability of their predictions. Consequently, the model's performance metrics, such as precision, recall, and F1 score, can drop drastically. In a business scenario, underfitting could lead to a model that overlooks key market trends or customer behaviors, resulting in missed opportunities and false predictions. Similarly, underfitting in a predictive model can lead to an oversimplified understanding of the data. Underfitting typically occurs when the model is too simple or when the number of features (variables used by the model to make predictions) is too small to represent the data accurately.

When trying to achieve better and more comprehensive results and reduce underfitting, it is all about increasing labels and process complexity. A lot of people talk about the theoretical angle, but I feel that is not enough: we need to visualize how underfitting and overfitting actually work.

Well-known ensemble methods include bagging and boosting, which help prevent overfitting because an ensemble model is made from the aggregation of multiple models. Bagging involves training many strong learners in parallel and then combining them to improve their predictions. Another option (similar to data augmentation) is adding noise to the input and output data.
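To make the bagging idea above concrete, here is a minimal sketch: several copies of a high-variance learner are fitted on bootstrap resamples and their predictions are averaged. The 1-nearest-neighbor base learner, the function names, and the synthetic data are all assumptions made for illustration, and the models are trained in a simple loop rather than in parallel.

```python
import random


def nearest_neighbor_predict(train_pairs, x):
    """Predict with the label of the closest training point (a high-variance learner)."""
    return min(train_pairs, key=lambda pair: abs(pair[0] - x))[1]


def bagged_predict(train_pairs, x, n_models=25, seed=0):
    """Average the predictions of learners fitted on bootstrap resamples."""
    rng = random.Random(seed)
    predictions = []
    for _ in range(n_models):
        # Bootstrap: sample with replacement, same size as the original set.
        resample = [rng.choice(train_pairs) for _ in train_pairs]
        predictions.append(nearest_neighbor_predict(resample, x))
    return sum(predictions) / len(predictions)


# Hypothetical noisy samples of the trend y = x.
data_rng = random.Random(42)
train_pairs = [(i / 10, i / 10 + data_rng.gauss(0, 0.3)) for i in range(50)]

single = nearest_neighbor_predict(train_pairs, 2.55)
bagged = bagged_predict(train_pairs, 2.55)
print(single, bagged)
```

A single nearest-neighbor prediction copies the noise of one training point, while the bagged prediction averages over several nearby points, which tends to smooth that noise out.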

Unless stated otherwise, we assume that both the training set and the test set are drawn independently and identically from the same distribution. This means that in drawing from the distribution there is no memory between draws. An obvious case where this might be violated is if we want to build a face recognition system by training it on elementary school students and then deploy it in the general population. This is unlikely to work since, well, the students tend to look quite different from the general population. By training, we try to find a function that does particularly well on the training data.

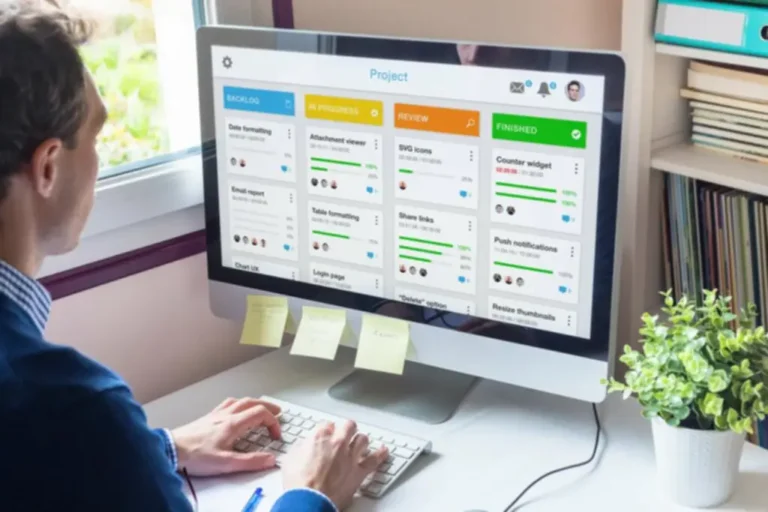

In k-fold cross-validation, we iteratively train the algorithm on k-1 folds while using the remaining holdout fold as the test set. This method allows us to tune the hyperparameters of the neural network or machine learning model and test it using completely unseen data. In machine learning, we usually select our model based on an evaluation of the performance of several candidate models. The candidate models can be similar models using different hyperparameters. Using the multilayer perceptron as an example, we can select the number of hidden layers as well as the number of hidden units and the activation functions in each hidden layer.
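The fold rotation described above can be sketched as an index generator. The name `k_fold_indices` is hypothetical, and the sketch assumes the samples have already been shuffled, so it simply cuts the index range into contiguous folds.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]                  # the holdout fold
        train_idx = indices[:start] + indices[start + size:]    # the other k-1 folds
        yield train_idx, test_idx
        start += size


folds = list(k_fold_indices(10, 3))
for train_idx, test_idx in folds:
    print(len(train_idx), test_idx)
```

Each candidate model or hyperparameter setting is trained once per fold, and the holdout scores are averaged to compare candidates.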

On the other hand, overfit models experience high variance: they give accurate results for the training set but not for the test set. Data scientists aim to find the sweet spot between underfitting and overfitting when fitting a model. A well-fitted model can quickly establish the dominant trend for seen and unseen data sets.

A higher-order polynomial function is more complex than a lower-order polynomial function, since the higher-order polynomial has more parameters and the model function's selection range is wider. Therefore, using the same training data set, higher-order polynomial functions should be able to achieve a lower training error rate (relative to lower-degree polynomials). Bearing in mind the given training data set, the typical relationship between model complexity and error is shown in the diagram below.
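The claim that higher-order polynomials reach a lower training error on the same data can be checked with a small least-squares sketch. Everything here is an illustrative assumption: the helper names, the normal-equation solver, and the synthetic data (a cubic trend plus a small deterministic wiggle standing in for noise).

```python
import math


def fit_polynomial(xs, ys, degree):
    """Least-squares polynomial coefficients (lowest order first) via normal equations."""
    n = degree + 1
    # Augmented normal-equation matrix [A^T A | A^T y] for the Vandermonde matrix A.
    rows = [
        [sum(x ** (i + j) for x in xs) for j in range(n)]
        + [sum(y * x ** i for x, y in zip(xs, ys))]
        for i in range(n)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(rows[r][col]))
        rows[col], rows[pivot] = rows[pivot], rows[col]
        for r in range(col + 1, n):
            factor = rows[r][col] / rows[col][col]
            for c in range(col, n + 1):
                rows[r][c] -= factor * rows[col][c]
    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(rows[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (rows[i][n] - tail) / rows[i][i]
    return coeffs


def mean_squared_error(coeffs, xs, ys):
    """Average squared difference between polynomial predictions and targets."""
    preds = [sum(c * x ** p for p, c in enumerate(coeffs)) for x in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(xs)


# Hypothetical training data: 11 points in [-1, 1].
xs = [i / 5 - 1 for i in range(11)]
ys = [x ** 3 - x + 0.05 * math.sin(25 * x) for x in xs]

train_errors = {
    degree: mean_squared_error(fit_polynomial(xs, ys, degree), xs, ys)
    for degree in (1, 3, 5)
}
for degree, err in train_errors.items():
    print(degree, err)
```

A lower training error for the higher degree says nothing about test error: past some point, the extra parameters start fitting the wiggle rather than the trend, which is the overfitting regime.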

Here, generalization refers to the ability of an ML model to produce suitable output for a given set of previously unseen inputs. It means that after being trained on the dataset, the model can produce reliable and accurate output. Hence, underfitting and overfitting are the two conditions to check when assessing the model's performance and whether it is generalizing well. While it might seem counterintuitive, adding complexity can improve your model's ability to handle outliers in the data. Additionally, by capturing more of the underlying data points, a complex model can make more accurate predictions when presented with new data points.

- This overfit model might struggle to make accurate predictions when new, unseen data is introduced, particularly in periods of market volatility.

- But the main cause is overfitting, so there are several ways in which we can reduce the occurrence of overfitting in our model.

Overfitting occurs when a machine learning model learns and takes into account more information than necessary. It absorbs data noise and other irrelevant variables in your training data to the extent that the model performs worse when processing new data. There is such an overflow of irrelevant information that it distorts what the model learns from the actual training data set.

The VC dimension of a classifier is simply the largest number of points that it is able to shatter. Consider a model predicting the chances of diabetes in a population. If this model considers data points like income, the number of times you eat out, food consumption, the time you sleep and wake up, gym membership, etc., it might deliver skewed results. As we can see from the above diagram, the model is unable to capture the data points present in the plot. It is crucial to recognize both of these issues while building the model and to deal with them to improve its performance.

Due to time constraints, the first child only learned addition and was unable to learn subtraction, multiplication, or division. The second child had a phenomenal memory but was not very good at math, so instead he memorized all the problems in the problem book. During the exam, the first child solved only addition-related problems and was not able to tackle problems involving the other three basic arithmetic operations. The second child, on the other hand, was only capable of solving problems he had memorized from the problem book and was unable to answer any other questions. In this case, if the exam questions came from another textbook and covered all four basic arithmetic operations, neither child would manage to pass.
